📝 Selected Publications

This list is not frequently updated. Please see my Google Scholar for the full record and recent updates. (*Corresponding author, =Equal contribution.)

Preprint/Submitted Manuscript

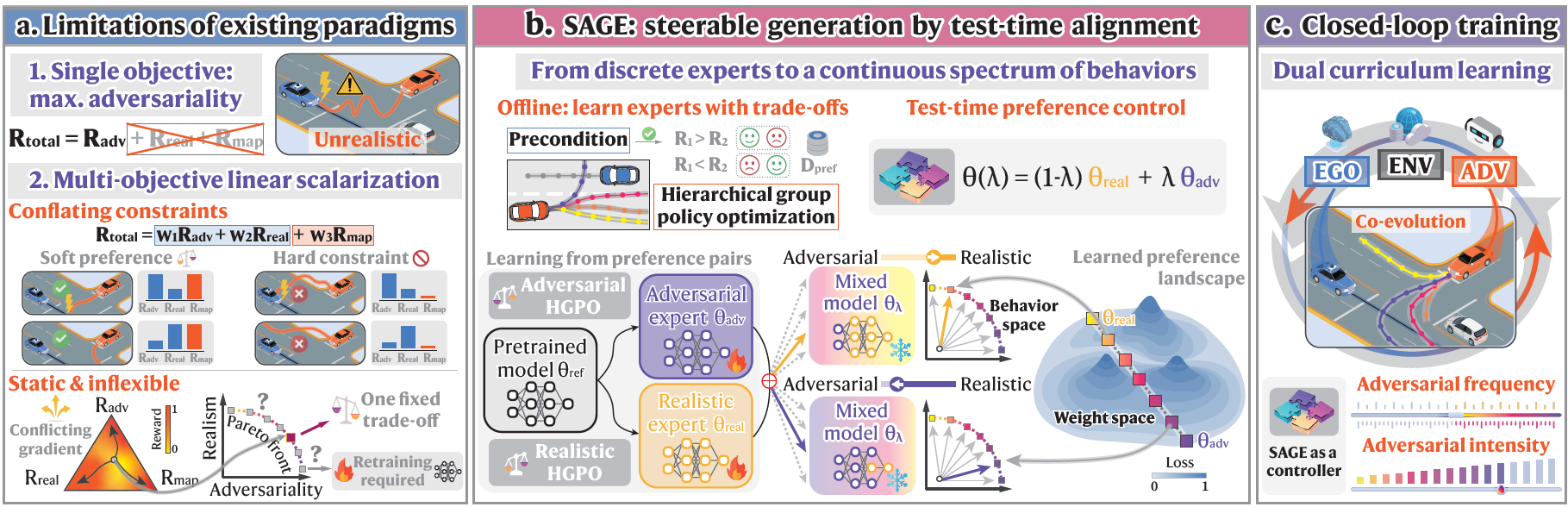

| Steerable Adversarial Scenario Generation through Test-Time Preference Alignment, Tong Nie(=), Yuewen Mei(=), Yihong Tang, Junlin He, Jie Sun, Haotian Shi, Wei Ma*, Jian Sun*. ICLR, 2026. | [Paper] | [Webpage] |

TL;DR: We introduce a new paradigm for adversarial scenario generation with test-time steerability.

Journal Publications

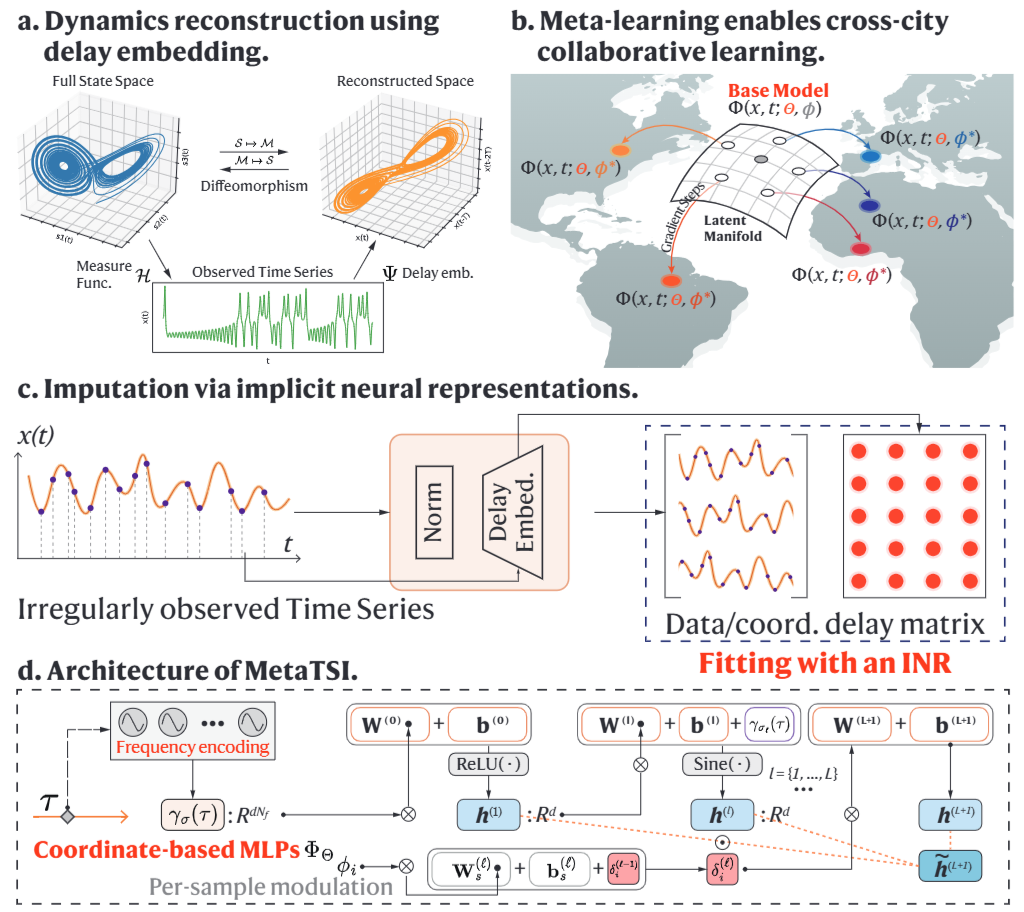

| Collaborative imputation of urban time series through cross-city meta-learning, Tong Nie, Wei Ma*, Jian Sun*, Yu Yang, Jiannong Cao. ICLR Workshop on Weight Space Learning / IEEE Transactions on Knowledge and Data Engineering (TKDE), 2025. | [Paper] |

TL;DR: We introduce a cross-city collaborative time series imputation method based on meta-learned implicit neural representations.

| Predicting Large-scale Urban Network Dynamics with Energy-informed Graph Neural Diffusion, Tong Nie, Jian Sun, Wei Ma*. IEEE Transactions on Industrial Informatics, 2025. | [Paper] |

TL;DR: A principled, interpretable neural diffusion scheme based on Transformer-like structures for modeling large-scale urban networked systems.

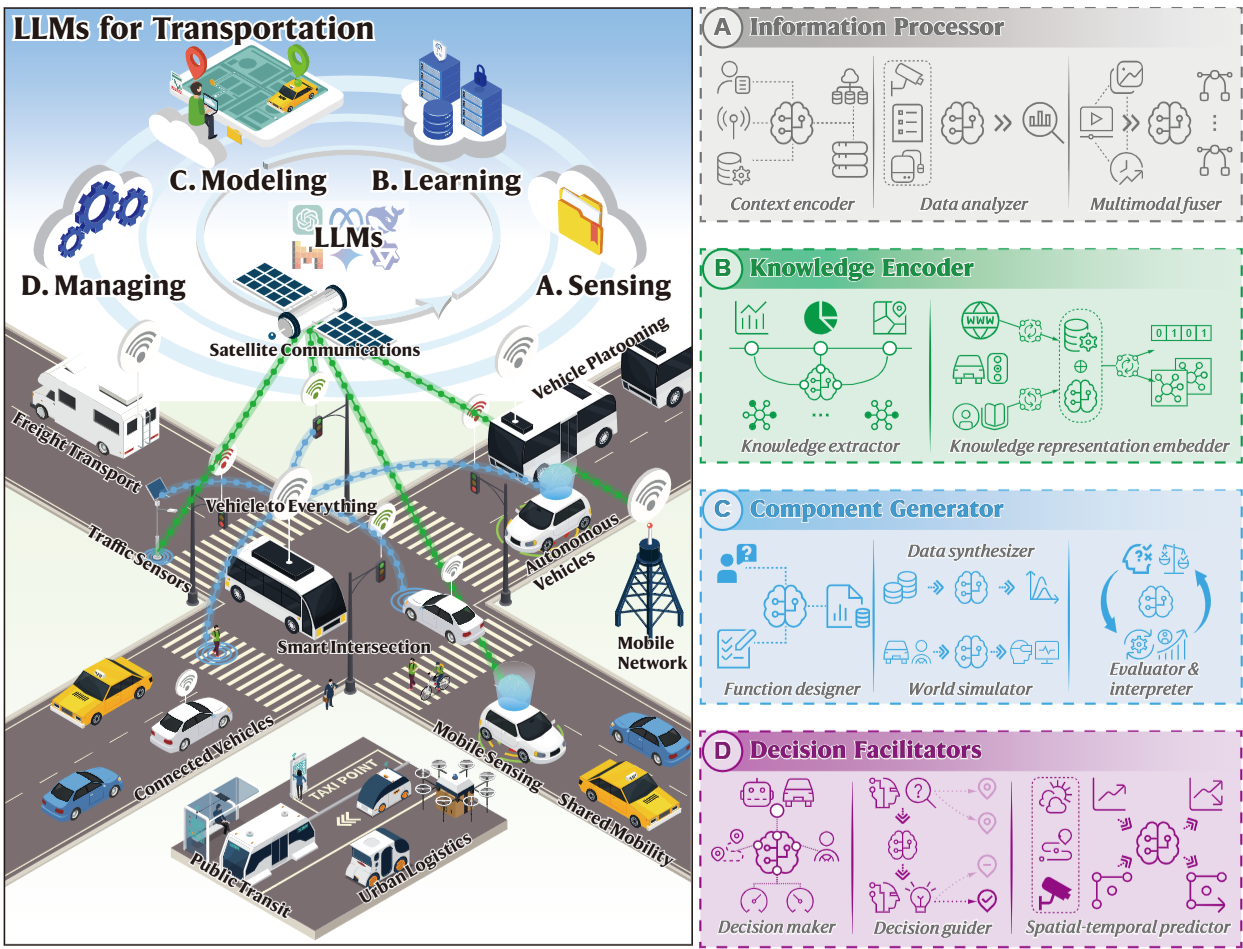

| Exploring the Roles of Large Language Models in Reshaping Transportation Systems: A Survey, Framework, and Roadmap, Tong Nie, Jian Sun, Wei Ma*. Artificial Intelligence for Transportation (Inaugural Issue), 2025. | [Paper] | [Project] |

TL;DR: We introduce the first systematic survey of LLMs in transportation systems from a methodological perspective.

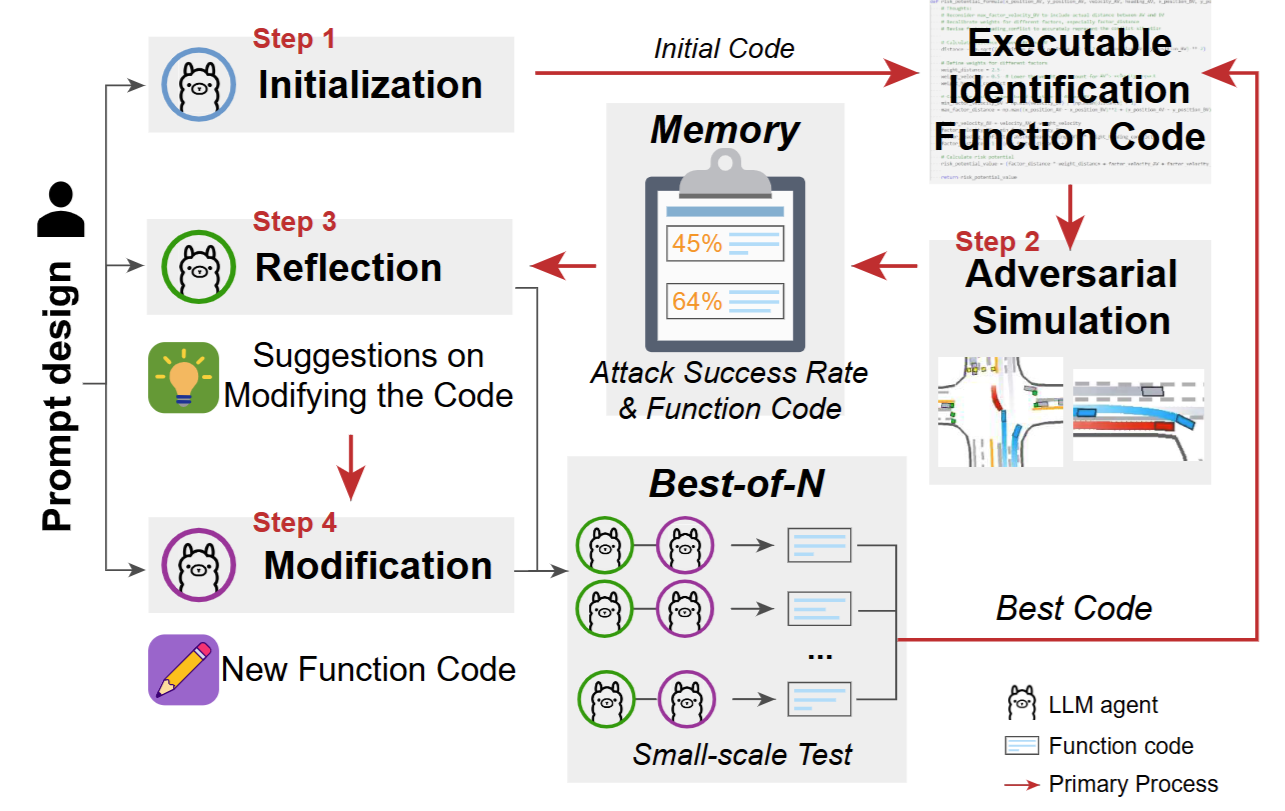

| LLM-attacker: Enhancing Closed-loop Adversarial Scenario Generation for Autonomous Driving with Large Language Models, Yuewen Mei, Tong Nie, Jian Sun*, Ye Tian. IEEE Transactions on Intelligent Transportation Systems, 2025. | [Paper] | [Video] |

TL;DR: We introduce an LLM-enhanced closed-loop adversarial scenario generation method for the testing of CAVs.

| Joint Estimation and Prediction of City-wide Delivery Demand: A Large Language Model Empowered Graph-based Learning Approach, Tong Nie, Junlin He, Yuewen Mei, Guoyang Qin, Guilong Li, Jian Sun*, Wei Ma*. Transportation Research Part E: Logistics and Transportation Review, 2025. | [Paper] | [Code] |

TL;DR: A transferable delivery demand predictor and estimator enhanced by LLM-based encodings and graph-based learning.

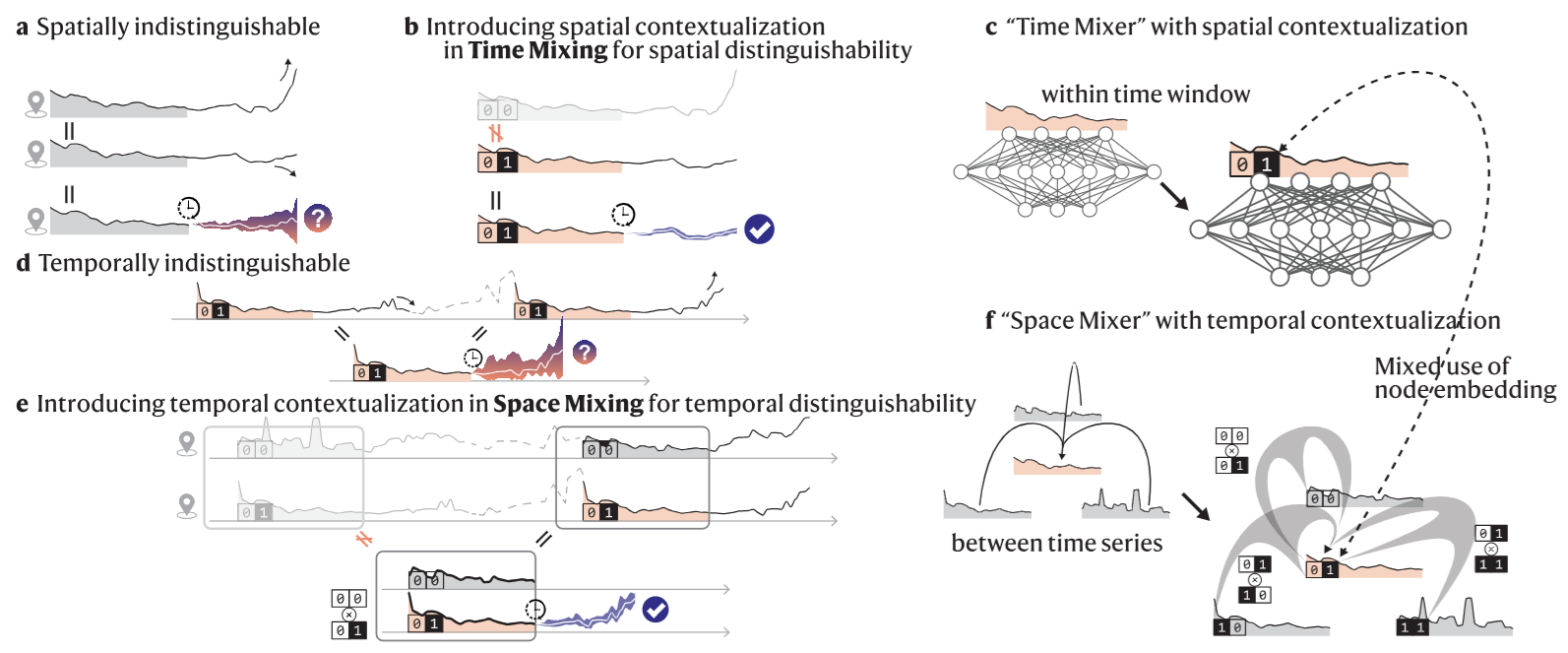

| Contextualizing MLP-Mixers Spatiotemporally for Urban Traffic Data Forecast at Scale, Tong Nie(=), Guoyang Qin(=), Lijun Sun, Wei Ma, Yu Mei, Jian Sun*. IEEE Transactions on Intelligent Transportation Systems, 2025. | [Paper] |

TL;DR: A simple yet effective MLP-based architecture for large-scale urban data forecasting.

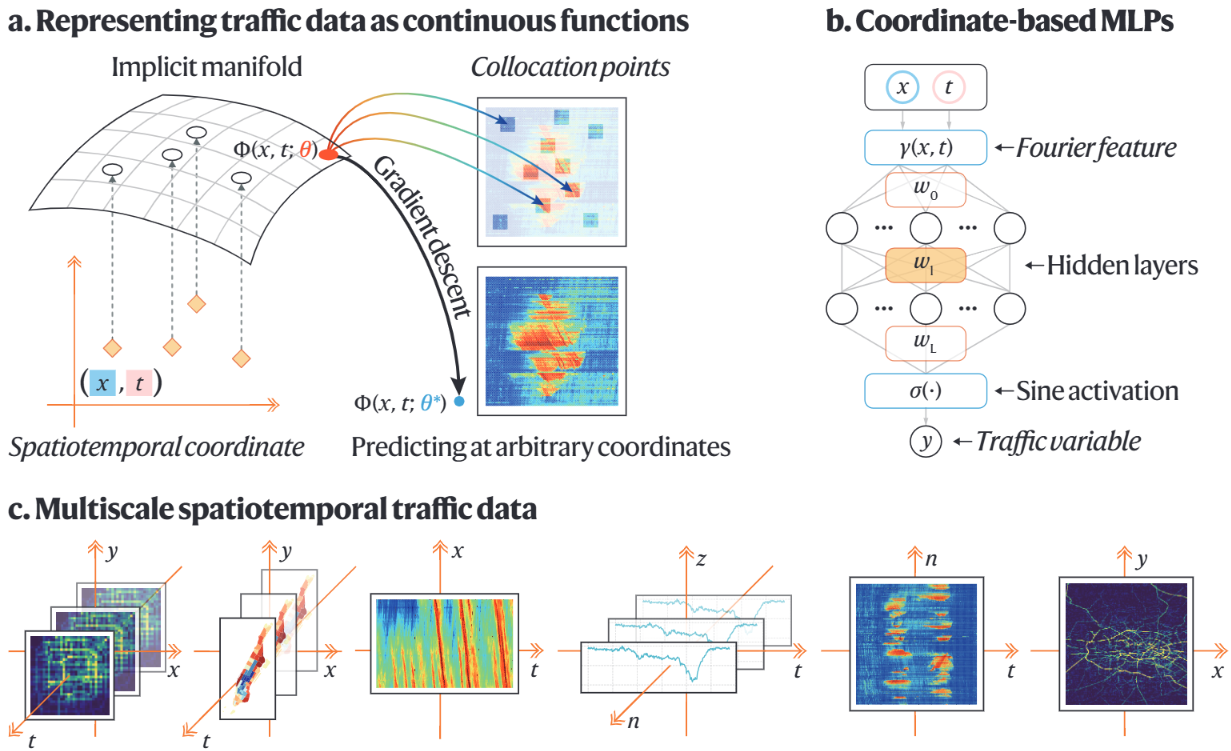

| Spatiotemporal Implicit Neural Representation as a Generalized Traffic Data Learner, Tong Nie, Guoyang Qin, Wei Ma*, Jian Sun*. Transportation Research Part C: Emerging Technologies, 2024. | [Paper] | [Code] |

TL;DR: A new paradigm for spatiotemporal traffic data learning using implicit neural representations.

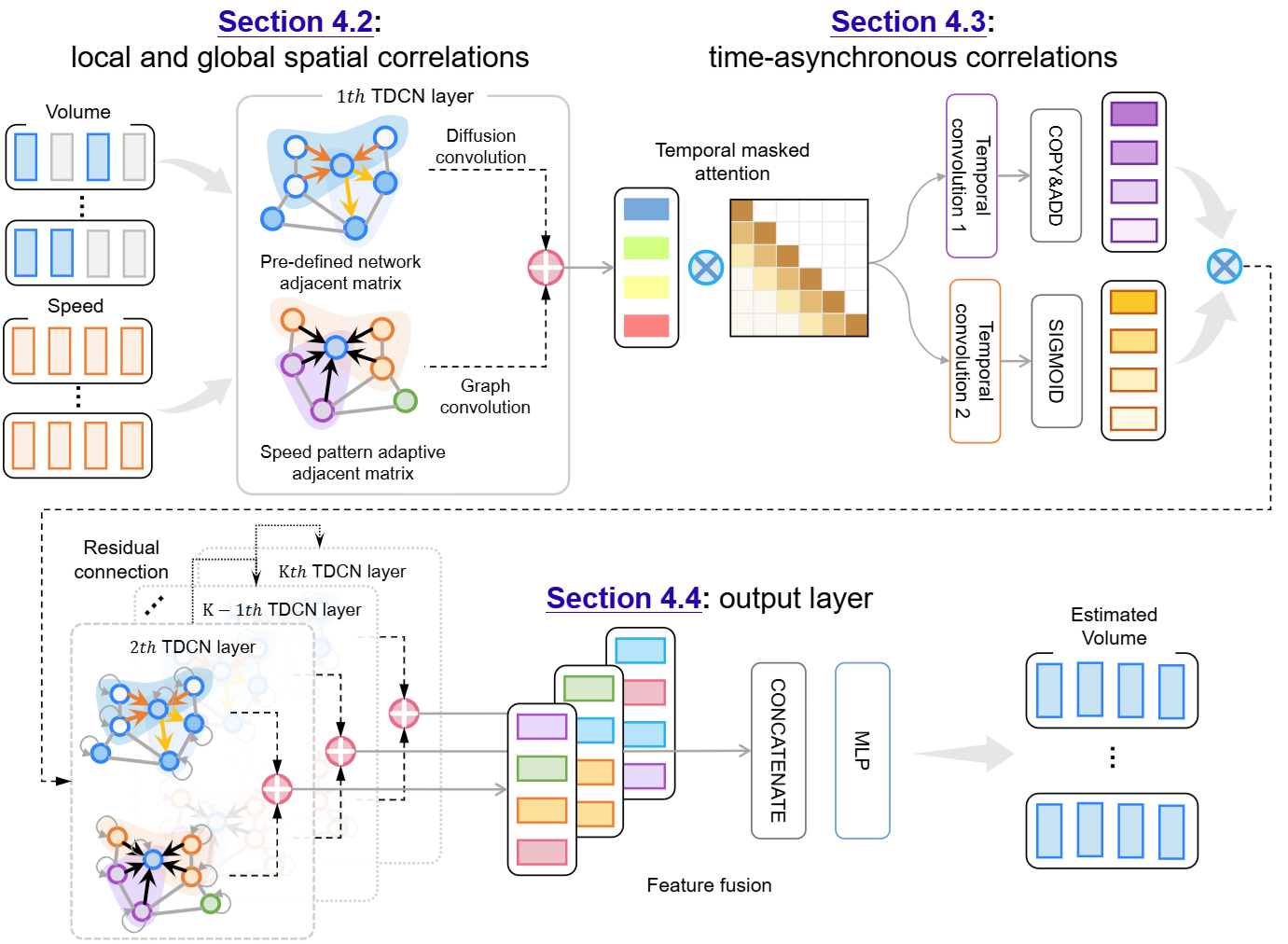

| Towards better traffic volume estimation: Jointly addressing the underdetermination and nonequilibrium problems with correlation-adaptive GNNs, Tong Nie, Guoyang Qin, Yunpeng Wang, Jian Sun*. Transportation Research Part C: Emerging Technologies, 2023. | [Paper] | [Code] |

TL;DR: A graph neural network model for estimating unobserved traffic flow that accounts for the speed-volume relationship.

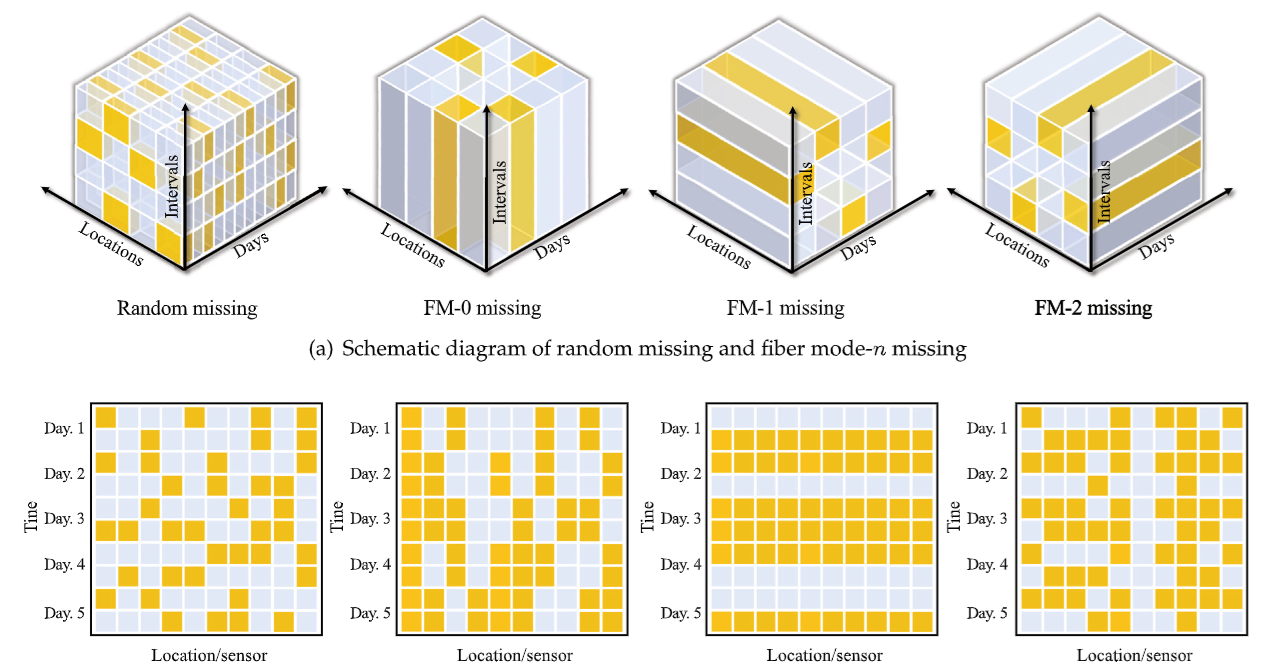

| Correlating sparse sensing for large-scale traffic speed estimation: A Laplacian-enhanced low-rank tensor kriging approach, Tong Nie, Guoyang Qin, Yunpeng Wang, Jian Sun*. Transportation Research Part C: Emerging Technologies, 2023. | [Paper] | [Code] |

TL;DR: A Laplacian-regularized tensor completion model for unobserved traffic speed estimation on large-scale highway networks.

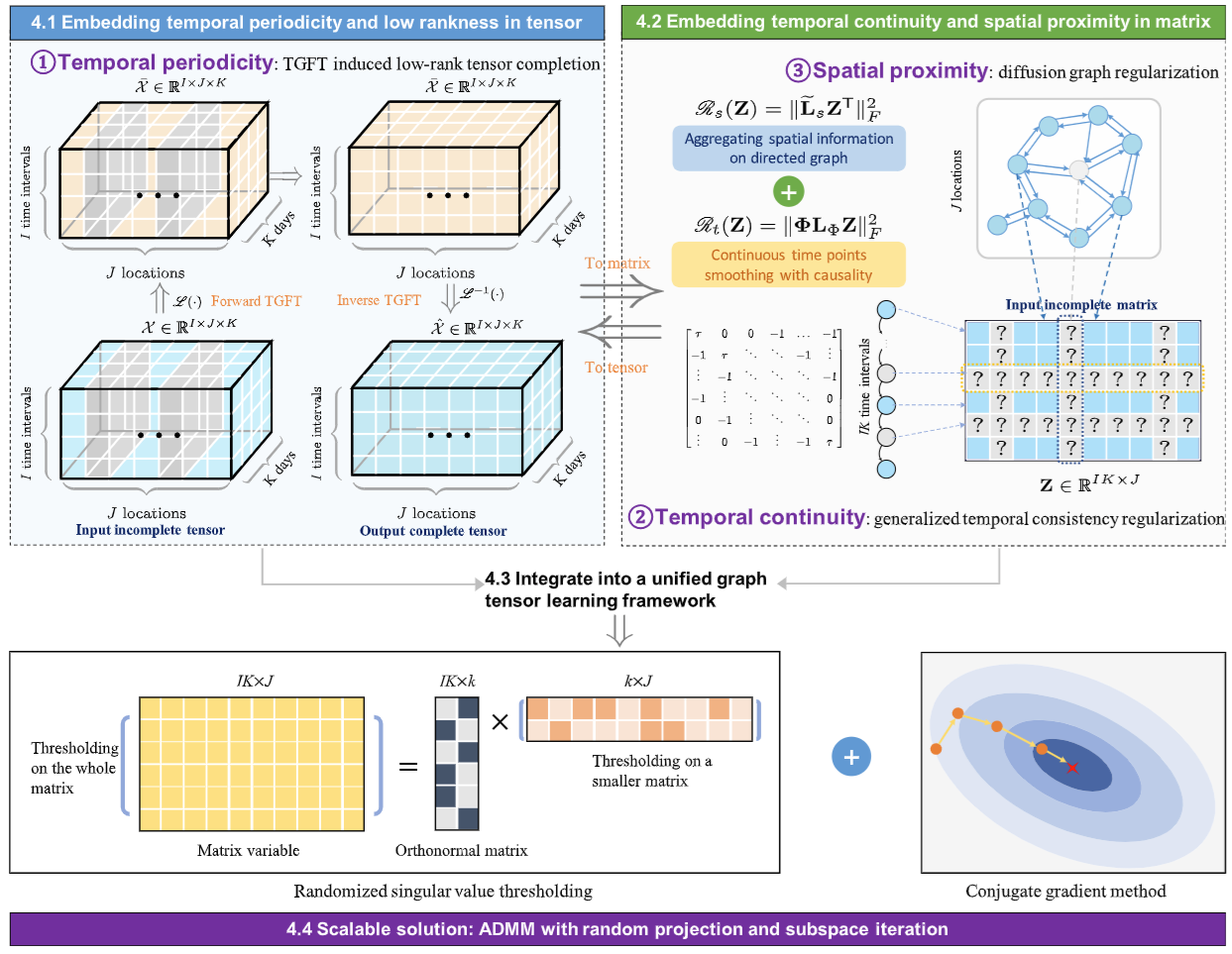

| Truncated tensor Schatten p-norm based approach for spatiotemporal traffic data imputation with complicated missing patterns, Tong Nie, Guoyang Qin, Jian Sun*. Transportation Research Part C: Emerging Technologies, 2022. | [Paper] | [Code] |

TL;DR: A tensor completion model optimized by ADMM for sparse traffic data imputation.

CS/AI Conference Proceedings

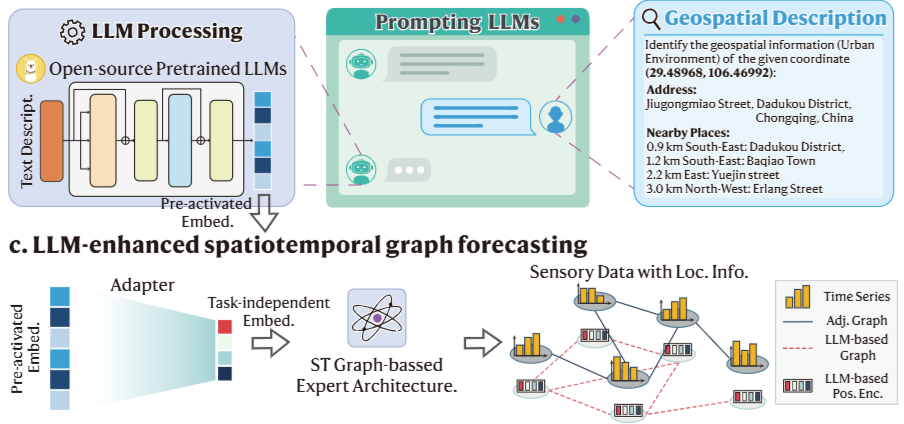

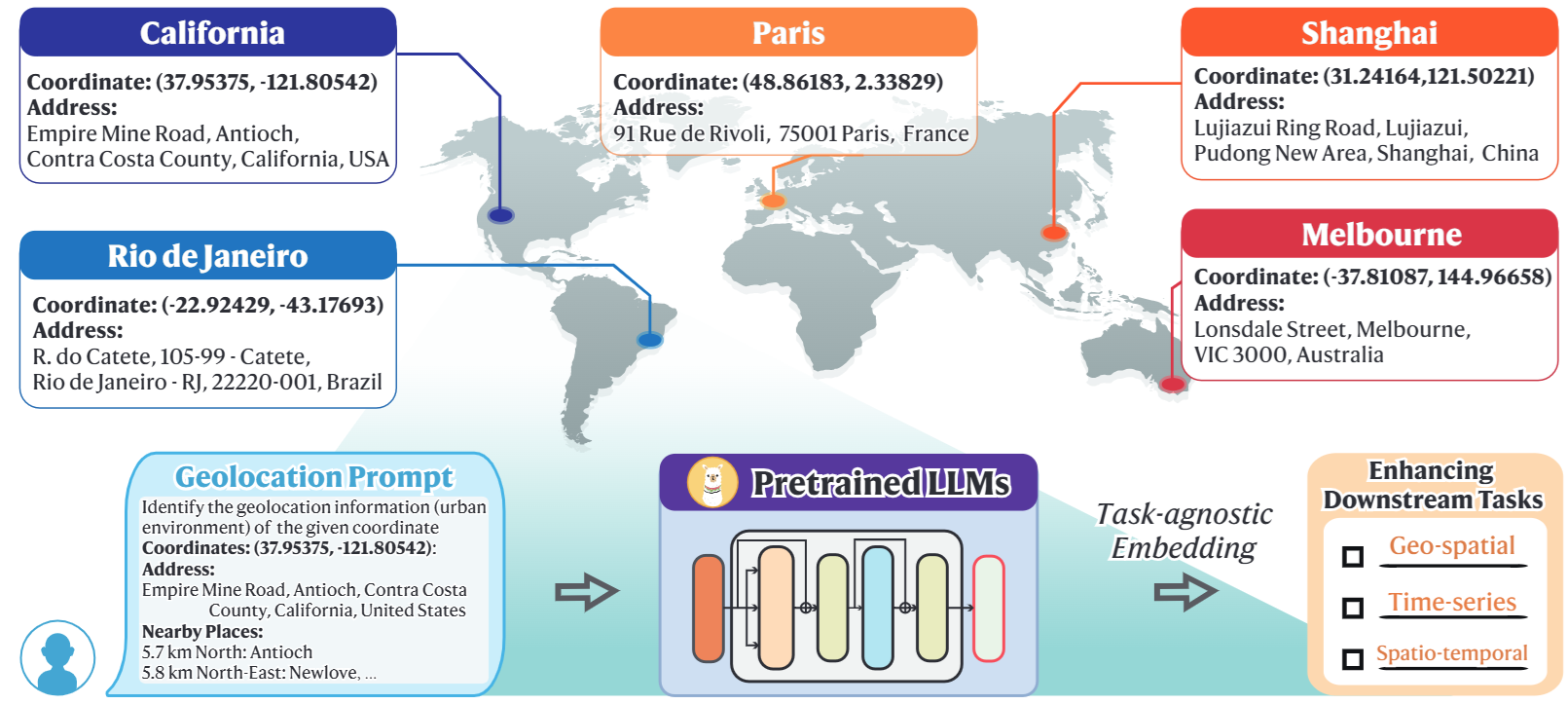

| Geolocation Representation from Large Language Models are Generic Enhancers for Spatio-Temporal Learning, Junlin He(=), Tong Nie(=), Wei Ma*. AAAI, 2025. | [Paper] | [Code] |

TL;DR: A training-free method to extract generic geospatial encoding from LLMs that can enhance various downstream predictive learning tasks.

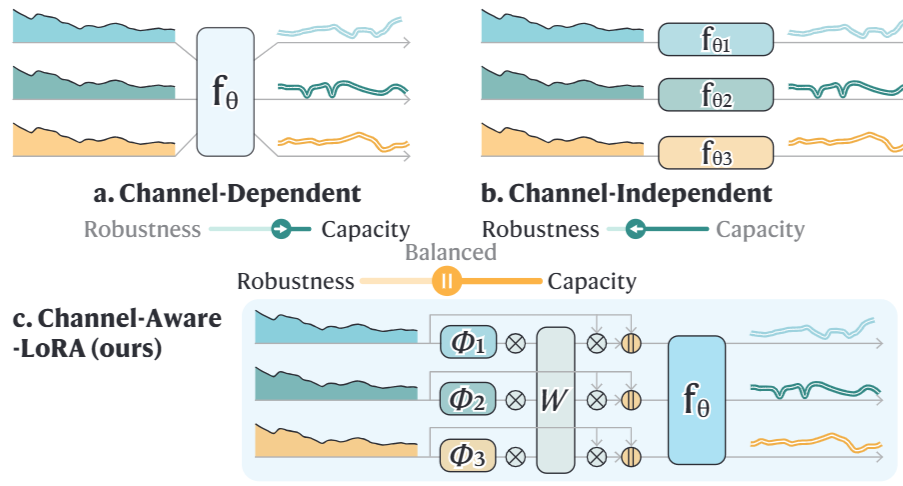

| Channel-Aware Low-Rank Adaptation in Time Series Forecasting, Tong Nie, Yuewen Mei, Guoyang Qin, Jian Sun, Wei Ma*. CIKM, 2024. | [Paper] | [Code] |

TL;DR: A channel-aware low-rank adaptation method to balance channel independence and channel dependence.

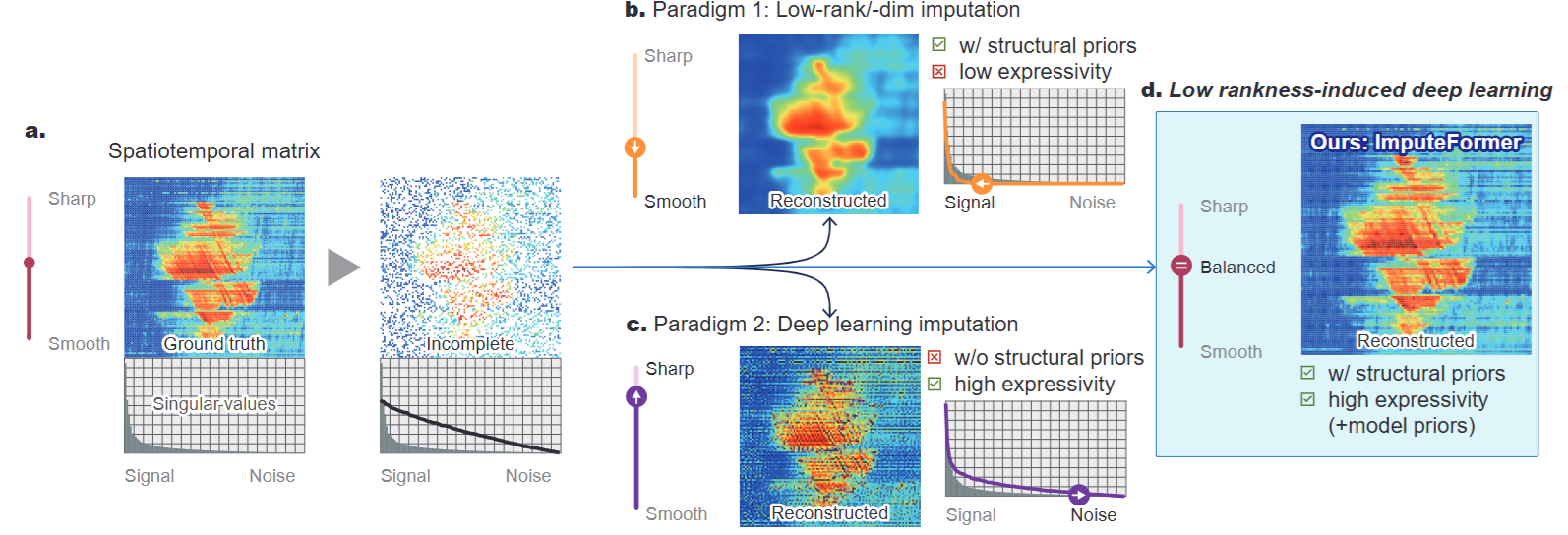

| ImputeFormer: Low Rankness-Induced Transformers for Generalizable Spatiotemporal Imputation, Tong Nie, Guoyang Qin, Wei Ma, Yuewen Mei, Jian Sun*. KDD, 2024. | [Paper] | [Code] |

TL;DR: A generalizable Transformer model for spatiotemporal data imputation that achieves state-of-the-art performance with high efficiency.

| Seeking to Collide: Online Safety-Critical Scenario Generation for Autonomous Driving with Retrieval Augmented Large Language Models, Yuewen Mei, Tong Nie*, Jian Sun, Ye Tian. IEEE ITSC, 2025. | [Paper] |

TL;DR: We introduce an online safety-critical scenario generation method for autonomous driving using retrieval-augmented LLMs.